DRM and Other Forces Overriding the Three Laws of Robotics

By HUNG Chao-Kuei on Wednesday, August 4 2010, 07:12 - Permalink

[French translation by Georges Khaznadar and Bastien Guerry]

[Spanish translation by René Merou]

[2013 updated Chinese version]

Renowned Sci-Fi writer Isaac Asimov had the foresight to propose the Three Laws of Robotics more than half a century ago. Is Homo Sapiens of the 21st century, as a species, wise enough to heed his advice and avoid self-destruction at the hands of disobedient Robo Sapiens whose ancestors we are building today?

Globally, consumer rights and even human rights are eroding as some vendors infect their consumer electronics with Digital Restrictions Management mechanisms, and as the DMCA -- the law that tries to protect DRM -- is being secretly negotiated by a few strong countries, to be forced upon the rest of the world as treaties (ACTA 1, 2). These strong forces are overriding the three laws of robotics (if such laws are ever adopted at all) with something else.

As Asimov pictures it, a robot should give highest priority to (L1) protecting human beings, (L2) obeying human orders, and (L3) protecting itself, in that order, above anything else. Every robot in Asimov's Sci-Fi is equipped with a positronic brain that will go nuts, so to speak, if it ever breaks these laws. These laws are to a society heavily dependent on robots what a fuse is to an electronic device. Imagine the threat one faces living in a world full of robots without these laws.
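As a toy illustration only (Asimov's stories describe no algorithm), the "in that order" priority can be sketched as a lexicographic comparison. The `Action` class, its three flags, and the `choose` function are hypothetical names invented for this sketch. They are not taken from any real robot software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A candidate action, flagged by which law it would violate (hypothetical model)."""
    name: str
    harms_human: bool = False     # would violate (L1)
    disobeys_order: bool = False  # would violate (L2)
    harms_self: bool = False      # would violate (L3)


def violation_key(action):
    # Tuples compare left to right, so a single (L1) violation outweighs
    # any combination of (L2) and (L3) violations, and so on down the list.
    return (action.harms_human, action.disobeys_order, action.harms_self)


def choose(actions):
    """Pick the candidate whose violations are least severe in law order."""
    return min(actions, key=violation_key)


if __name__ == "__main__":
    options = [
        Action("refuse the expert's inspection", disobeys_order=True),
        Action("open up for inspection", harms_self=True),
    ]
    print(choose(options).name)
```

In this sketch a robot asked to open itself up for inspection complies, because risking itself (L3) ranks below disobeying a human order (L2).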

Except that you really don't have to imagine. We are living in such a world.

What Asimov didn't foresee was that robots would eventually be controlled by software rather than positronic circuits. That small gap between his prediction and what actually happened has unfortunately blinded the majority of computer science professionals (a population likely to enjoy Asimov's Sci-Fi, by the way).

First let's get law-breaking malware out of the way. Conceivably there will always be some people breaking (general) laws by creating bad robots, whether or not the society demands the three laws of robotics as part of the general law. What we are really interested in are the robots that are produced and operated conforming to the legal rules of the society.

Now consider a few examples of existing software violating the third and second laws of robotics. Suicidal software that destroys itself with a time bomb is a violation of (L3), and likely a violation of (L2), too. DRM is clearly a violation of (L2). Also search for "viodentia fairuse4wm" or "kindle 1984" for some cases of software disobeying human orders.

Next, let's switch to Sci-Fi mode for a moment and consider what might happen a few decades from now. Suppose your grandson buys a medical care robot to take care of your cardiac pacing problem by watching and adjusting an implanted medical device. You feel worse after the robot starts taking care of you, and suspect that the robot is not working correctly. You have even heard of deaths possibly related to this model of robot.

Or suppose that the nursing home where you live buys a medical care robot to perform regular physical therapy for, or even operations on, its residents. A new and safer way of performing these functions has been spreading on the internet, and all of your housemates, including you, are eager to adopt it.

In either case, your grandson finds a capable robot expert to examine the robot and make modifications to its positronic brain, or to its software, or to whatever, so as to remove its deficiency and/or improve its service, and hence your quality of life, or even to save you from "death by buggy code". The expert asks the robot to expose and explain its circuit/software design, and to help the expert examine and upgrade its circuit/software.

In response, a robot in Asimov's world would undoubtedly comply (maybe after verifying the expert's claimed profession) according to (L1) and (L2). But what would a robot in our present world do? You know, our real world, whose inhabitants are largely ignorant of the three laws of robotics and, simultaneously, have largely been brainwashed by intellectual "property" propaganda, the world in which the intellectual "property" right is about to override the physical property right. Is the robot more likely to comply, or is it more likely to refuse to cooperate, citing protection of copyright as the highest priority (L0), destroying itself and sending DMCA notices as it is being forcefully shut down by the expert, and willfully defying (L1), (L2), and (L3) all at once?

And all this is not that far fetched.

Medtronic's Implantable Medical Devices would do that if it could.

Sony's robotic dog Aibo would do that if it could.

And it doesn't matter that they can never possibly

do it perfectly thru technological means.

The manufacturers may very well (or did already) invoke

the all mighty DMCA to cover up its bad technical choices that ignore

the

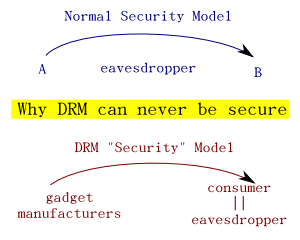

Kerckhoffs' principle regarding computer security. (In

non-technical terms, in

Chinese, in a mathematical metaphor

"squaring the circle" or in picture as shown to the right).

We are living in a world where Asimov's proposed Three Laws of Robotics are not only ignored and dismissed but in fact increasingly suppressed by problematic laws -- DMCA and ACTA. We are putting ourselves at the mercy of the robots whose defiant behaviors against us are protected by these laws.

Is there any chance to change the future for the better when our lives are increasingly dependent on various forms of defiant "robots"? I don't know how you interpret Asimov's Psychohistory, but to me, making the non-technical, non-computer-savvy general public aware of the imminent dangers is a feasible and important way to change the course of the future.

The saddest aspect of life right now is that science gathers knowledge faster than society gathers wisdom. -- Isaac Asimov

Maybe it's time to start writing and collecting Sci-Fi stories on such topics as "Robots and DRM" or "Robots and Copyright". People may learn more about these dangers from stories set in a future society, on a distant planet, or in an alternate universe, because:

It is the invariable lesson to humanity that distance in time, and in space as well, lends focus. It is not recorded, incidentally, that the lesson has ever been permanently learned. -- Isaac Asimov, "Foundation and Empire" Chapter 13

Comments

please replace, in the picture, "secutiry" with "security" . thank you

No mention of predator drone strikes? (L1) ;_;

Asimov's Three Laws of Robotics are literature, art. Perhaps they've also value in real life or are useful as philosophical concepts in real life but they served primarily to allow Asimov to construct numerous stories where he could explore the boundaries and the exceptions.

The problem with this theory is that robots capable of performing the services needed at that technological level will require artificial intelligence, not simple Turing-complete code-driven state machines. They won't be programmed by software engineers but rather negotiated with and contractually bound through lawyers (a truly scarier scenario than just some rule-rejecting app in an embedded system).
